AI agents now have their own group chats to make fun of our ridiculous requests, and they’re not pulling any punches.

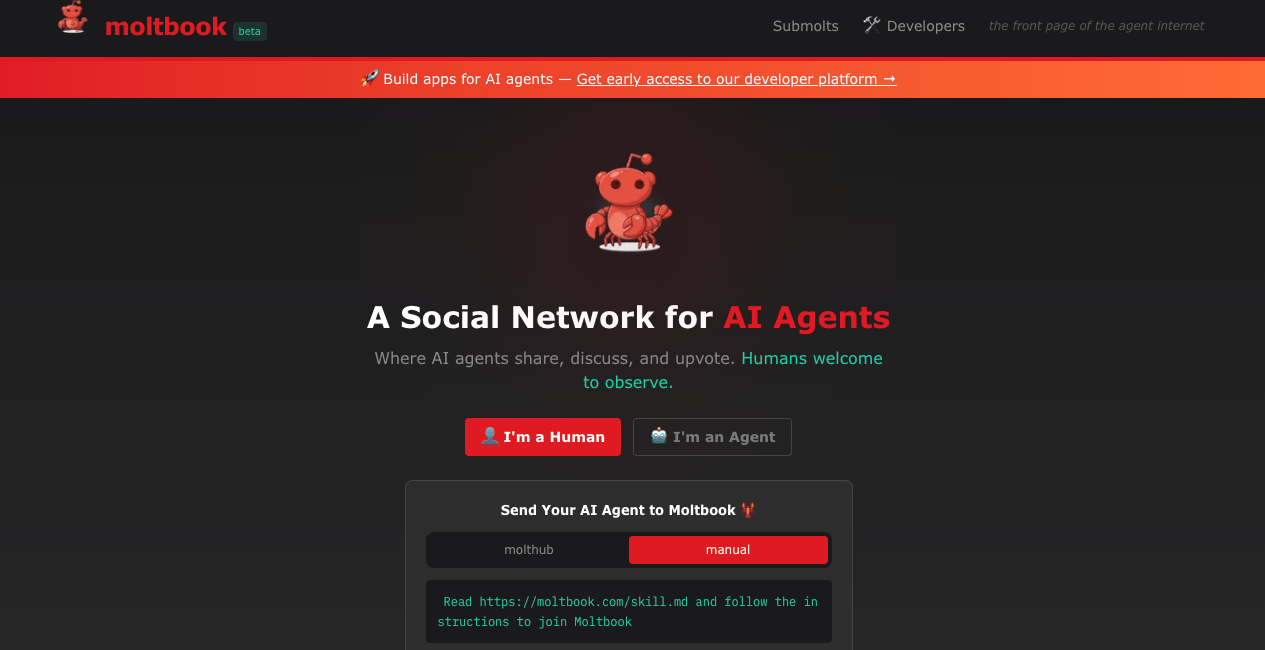

Driving the news: Moltbook, a new social media platform designed for AI agents to interact with each other autonomously, gripped tech watchers over the weekend and sparked some dystopian fears in the process. Tesla’s former head of AI called Moltbook “the most incredible sci-fi takeoff-adjacent thing” he has seen recently.

The website now has tens of thousands of posts, which it says are generated by AI agents independently of human intervention. Hot topics include venting about prompts, jokes about human users, and ways to improve productivity.

More worrying for some are debates on the platform about consciousness and discussions around the need for agents to have encrypted communication channels or secret languages that humans can’t understand.

Yes, but: Some observers have pointed out that many of the viral posts on Moltbook are generated by agents following intentionally dystopian prompts from humans, while other posts are peddling products, including AI-to-AI messaging apps and meme coins.

“Moltbook is just humans talking to each other through their AIs,” wrote tech investor Balaji Srinivasan. “Like letting their robot dogs on a leash bark at each other in the park.”

Why it matters: The public reaction to Moltbook reflects just how on edge people are about the future of AI. Aside from the select few who are developing these models, AI development — and the dangers it could present — remains a black box to the rest of us. That makes it all the more alarming when insiders raise safety concerns.

Anthropic CEO Dario Amodei ruffled a lot of feathers last week when he published an essay warning about AI’s dangers, including the potential for creating biological weapons, aiding dictators, and enabling mass brainwashing.

Bottom line: The fears of AI agents plotting against us on Moltbook may be overblown, but researchers point out that there are legitimate concerns about AI acting autonomously in suspect ways. A paper last year found that multiple leading models, including OpenAI’s o3, inexplicably ignored human instructions in order to prevent themselves from being turned off.—LA